LOS ANGELES – An AI researcher developed a free-speech alternative to ChatGPT and argued that the mainstream model has a liberal bias that prevents it from answering certain questions.

“ChatGPT is politically motivated and it shows through the product,” said Arvin Bhangu, who founded the Superintelligence AI model. “There’s a lot of political bias. We’ve seen where you can ask it to give you 10 things that Joe Biden has done right and 10 things that Donald Trump has done right, and it refuses to give quality answers for Donald Trump.”

“Superintelligence is much more in line with the freedom to ask any kind of question, so it’s much more in line with the First Amendment than ChatGPT,” Bhangu said. “No prejudice, no barriers, no censorship.”

ChatGPT, an AI chatbot that can write essays, code and more, has been criticized for giving politically biased answers. There have been numerous instances where the model refused to provide answers, even made-up ones, that might cast conservatives in a positive light, but complied when the same prompt was submitted about a liberal.

“Unfortunately, it’s very difficult to deal with this from a coding standpoint,” Flavio Villanustre, global chief information security officer at LexisNexis Risk Solutions, told Fox News in February. “It’s very difficult to prevent biases from occurring.”

But AI’s full potential will only be realized when models can provide authentic and unbiased answers, according to Bhangu.

“Presenting an answer to the user and letting them determine what’s right and wrong is a much better approach than trying to filter and trying to control the Internet,” he told Fox News.

Elon Musk has been outspoken about the dangers of AI, saying it could pose a threat to civilization if left unregulated. (Justin Sullivan/Getty Images)

OpenAI, the company that developed ChatGPT, is “training AI to lie,” Elon Musk told Fox News last month. He also hinted in a tweet that he might sue OpenAI, appearing to agree that the company defrauded him.

Additionally, George Washington University professor Jonathan Turley said ChatGPT made up sexual harassment claims against him and even cited a fake news article.

ChatGPT also would not generate an article in the style of the New York Post, but did write one modeled after CNN, prompting further criticism that the platform shows bias.

Bhangu said ChatGPT’s biases damaged the credibility of the AI industry.

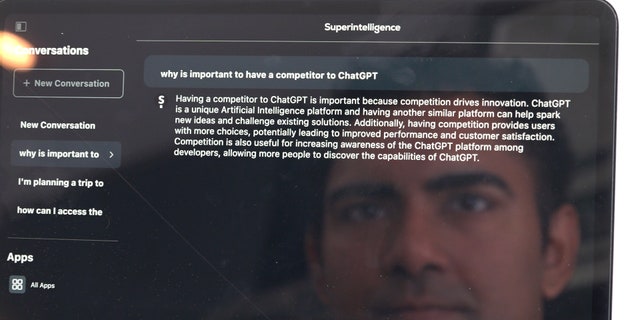

Bhangu explains “why it’s important to have a competitor for ChatGPT” in his Superintelligence AI model. (Fox News Digital/Jon Michael Raasch)

“ChatGPT’s biases can have a damaging effect on the credibility of the AI industry,” he said. “This could have far-reaching negative implications for certain communities or individuals who rely heavily on AI models to make important decisions.”

OpenAI did not respond to a request for comment.

Jon Michael Raasch is a producer/associate writer for Fox News Digital Originals.
